
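1. Kubernetes container resource limits

Kubernetes uses two kinds of settings, requests and limits, to allocate resources to containers.

• request: the amount of a resource reserved for the container. A node must have at least this much available before the Pod can be scheduled onto it.

• limit: the upper bound on what the container may use. Memory usage may grow while the Pod runs, so the YAML file can cap how much memory the container is allowed to use.

Resource types:

• CPU is measured in cores; memory is measured in bytes.

• A container that requests 0.5 CPU asks for half of one CPU. The suffix m means one thousandth of a core (a millicore), so 100m, "100 millicores", and 0.1 CPU all denote the same amount.

Memory units:

• k, M, G, T, P, E   # powers of 1000

• Ki, Mi, Gi, Ti, Pi, Ei   # powers of 1024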
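The gap between the two suffix families, and the meaning of the m suffix, can be checked with a little shell arithmetic. This is only an illustrative sketch: `to_bytes` and `to_millicores` are hypothetical helper names (not part of kubectl), and they handle just a few of the suffixes listed above and whole-number values.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for illustration only; they are not Kubernetes tools.

# to_bytes QUANTITY: convert e.g. 100M or 100Mi to bytes.
# Supports k, M, G (powers of 1000) and Ki, Mi, Gi (powers of 1024).
to_bytes() {
  local q=$1 num suffix
  num=${q%%[!0-9]*}      # leading digits
  suffix=${q#"$num"}     # everything after the digits
  case $suffix in
    "") echo "$num" ;;
    k)  echo $((num * 1000)) ;;
    M)  echo $((num * 1000 * 1000)) ;;
    G)  echo $((num * 1000 * 1000 * 1000)) ;;
    Ki) echo $((num * 1024)) ;;
    Mi) echo $((num * 1024 * 1024)) ;;
    Gi) echo $((num * 1024 * 1024 * 1024)) ;;
    *)  echo "unsupported suffix: $suffix" >&2; return 1 ;;
  esac
}

# to_millicores QUANTITY: accepts "100m" or a whole number of cores such as "2".
# Fractional values such as 0.5 are not handled by this sketch.
to_millicores() {
  local q=$1
  case $q in
    *m) echo "${q%m}" ;;
    *)  echo $((q * 1000)) ;;
  esac
}

to_bytes 100M        # prints 100000000
to_bytes 100Mi       # prints 104857600
to_millicores 100m   # prints 100
to_millicores 2      # prints 2000
```

So 100Mi is roughly 4.9% larger than 100M, which matters when a limit is set close to what an application actually allocates.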

1). Memory limits

[root@node11 ~]# docker load -i stress.tar
[root@node11 harbor]# docker push reg.westos.org/library/stress:latest   # push the image to the private registry
[root@node22 limit]# vim pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: memory-demo
spec:
  containers:
  - name: memory-demo
    image: stress
    args:
    - --vm
    - "1"
    - --vm-bytes
    - 200M
    resources:
      requests:
        memory: 50Mi
      limits:
        memory: 100Mi
[root@node22 limit]# kubectl apply -f pod.yaml
pod/memory-demo created
[root@node22 limit]# kubectl get pod
NAME          READY   STATUS              RESTARTS   AGE
memory-demo   0/1     ContainerCreating   0          17s

The Pod does not come up: the container tries to use more memory than its limit allows, so it cannot run.

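If a container exceeds its memory limit, it is terminated. If it is restartable, the kubelet restarts it, as with any other kind of run-time failure. If a container exceeds its memory request, its Pod may be evicted when the node runs short of memory.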
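A quick calculation shows why the 200M allocation cannot fit under a 100Mi limit but does fit under the 201Mi limit used in the next manifest. The sketch makes no claim about how the stress tool interprets the M suffix; it simply checks both the binary and the decimal reading. The function name `check_limit` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Compare the stress allocation against the two limits used in this section.
limit_100Mi=$((100 * 1024 * 1024))
limit_201Mi=$((201 * 1024 * 1024))
alloc_binary=$((200 * 1024 * 1024))    # 200M read as 200 * 2^20 bytes
alloc_decimal=$((200 * 1000 * 1000))   # 200M read as 200 * 10^6 bytes

# check_limit ALLOC LIMIT: report whether ALLOC bytes fit under LIMIT bytes.
check_limit() {
  if [ "$1" -gt "$2" ]; then echo "over limit"; else echo "within limit"; fi
}

check_limit "$alloc_binary"  "$limit_100Mi"   # prints: over limit
check_limit "$alloc_decimal" "$limit_100Mi"   # prints: over limit
check_limit "$alloc_binary"  "$limit_201Mi"   # prints: within limit
check_limit "$alloc_decimal" "$limit_201Mi"   # prints: within limit
```

Under either reading the conclusion is the same, although a real container also needs some memory beyond the test allocation itself.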

[root@node22 limit]# vim pod.yaml   # raise the memory limit to 201Mi
apiVersion: v1
kind: Pod
metadata:
  name: memory-demo
spec:
  containers:
  - name: memory-demo
    image: stress
    args:
    - --vm
    - "1"
    - --vm-bytes
    - 200M
    resources:
      requests:
        memory: 50Mi
      limits:
        memory: 201Mi
[root@node22 limit]# kubectl apply -f pod.yaml
pod/memory-demo created
[root@node22 limit]# kubectl get pod
NAME          READY   STATUS    RESTARTS   AGE
memory-demo   1/1     Running   0          7s
[root@node22 limit]# kubectl delete -f pod.yaml
pod "memory-demo" deleted

2). CPU limits

[root@node22 limit]# vim pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: memory-demo
spec:
  containers:
  - name: memory-demo
    image: stress
    args:
    - -c
    - "2"
    resources:
      requests:
        cpu: 5
      limits:
        cpu: 10
[root@node22 limit]# kubectl apply -f pod.yaml
pod/memory-demo created
[root@node22 limit]# kubectl get pod
NAME          READY   STATUS    RESTARTS   AGE
memory-demo   0/1     Pending   0          6s

## Scheduling fails because the requested CPU exceeds what any node in the cluster can provide. High CPU usage alone, however, does not get a Pod killed.

[root@node22 limit]# vim pod.yaml   # lower the CPU values
apiVersion: v1
kind: Pod
metadata:
  name: memory-demo
spec:
  containers:
  - name: memory-demo
    image: stress
    args:
    - -c
    - "2"
    resources:
      requests:
        cpu: 1
      limits:
        cpu: 2
[root@node22 limit]# kubectl apply -f pod.yaml
pod/memory-demo created
[root@node22 limit]# kubectl get pod
NAME          READY   STATUS    RESTARTS   AGE
memory-demo   1/1     Running   0          3s
[root@node22 limit]# kubectl delete -f pod.yaml --force

3). Setting resource limits for a namespace

[root@node22 limit]# vim limit.yaml
apiVersion: v1
kind: LimitRange
metadata:
  name: limitrange-memory
spec:
  limits:
  - default:
      cpu: 0.5
      memory: 512Mi
    defaultRequest:
      cpu: 0.1
      memory: 256Mi
    max:
      cpu: 1
      memory: 1Gi
    min:
      cpu: 0.1
      memory: 100Mi
    type: Container
[root@node22 limit]# kubectl apply -f limit.yaml
limitrange/limitrange-memory created
[root@node22 limit]# kubectl get limitranges
NAME                CREATED AT
limitrange-memory   2022-09-03T15:55:19Z
[root@node22 limit]# kubectl describe limitranges
Name:       limitrange-memory
Namespace:  default
Type        Resource  Min    Max  Default Request  Default Limit  Max Limit/Request Ratio
----        --------  ---    ---  ---------------  -------------  -----------------------
Container   cpu       100m   1    100m             500m           -
Container   memory    100Mi  1Gi  256Mi            512Mi          -
[root@node22 limit]# kubectl run demo --image=nginx
pod/demo created
[root@node22 limit]# kubectl describe pod demo
    Limits:
      cpu:     500m
      memory:  512Mi
    Requests:
      cpu:     100m
      memory:  256Mi

## The minimum and maximum constraints that a LimitRange imposes on a namespace are enforced only when a Pod is created or updated. Changing the LimitRange does not affect Pods that were created earlier.

[root@node22 limit]# vim pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: memory-demo
spec:
  containers:
  - name: memory-demo
    image: nginx
    resources:
      requests:
        cpu: 1
        memory: 500Mi
      limits:
        cpu: 2
        memory: 1Gi
[root@node22 limit]# kubectl apply -f pod.yaml   # rejected: the CPU limit may be at most 1
Error from server (Forbidden): error when creating "pod.yaml": pods "memory-demo" is forbidden: maximum cpu usage per Container is 1, but limit is 2
[root@node22 limit]# kubectl describe limitranges
Name:       limitrange-memory
Namespace:  default
Type        Resource  Min    Max  Default Request  Default Limit  Max Limit/Request Ratio
----        --------  ---    ---  ---------------  -------------  -----------------------
Container   cpu       100m   1    100m             500m           -
Container   memory    100Mi  1Gi  256Mi            512Mi          -
[root@node22 limit]# vim pod.yaml   # change the CPU limit to 1
apiVersion: v1
kind: Pod
metadata:
  name: memory-demo
spec:
  containers:
  - name: memory-demo
    image: nginx
    resources:
      requests:
        cpu: 1
        memory: 500Mi
      limits:
        cpu: 1
        memory: 1Gi
[root@node22 limit]# kubectl apply -f pod.yaml
pod/memory-demo created

4). Setting a resource quota for a namespace

[root@node22 limit]# vim limit.yaml
apiVersion: v1
kind: LimitRange
metadata:
  name: limitrange-memory
spec:
  limits:
  - default:
      cpu: 0.5
      memory: 512Mi
    defaultRequest:
      cpu: 0.1
      memory: 256Mi
    max:
      cpu: 1
      memory: 1Gi
    min:
      cpu: 0.1
      memory: 100Mi
    type: Container
---
apiVersion: v1
kind: ResourceQuota
metadata:
  name: mem-cpu-demo
spec:
  hard:
    requests.cpu: "1"
    requests.memory: 1Gi
    limits.cpu: "2"
    limits.memory: 2Gi
[root@node22 limit]# kubectl apply -f limit.yaml
limitrange/limitrange-memory configured
resourcequota/mem-cpu-demo created
[root@node22 limit]# kubectl get pod
NAME          READY   STATUS    RESTARTS   AGE
demo          1/1     Running   0          10m
memory-demo   1/1     Running   0          4m43s
[root@node22 limit]# kubectl describe resourcequotas
Name:            mem-cpu-demo
Namespace:       default
Resource         Used    Hard
--------         ----    ----
limits.cpu       1500m   2
limits.memory    1536Mi  2Gi
requests.cpu     1100m   1
requests.memory  756Mi   1Gi
[root@node22 limit]# kubectl delete limitranges limitrange-memory   # delete the LimitRange
limitrange "limitrange-memory" deleted
[root@node22 limit]# kubectl describe limitranges
No resources found in default namespace.
[root@node22 limit]# kubectl run demo3 --image=nginx   # with the quota in place, a Pod without requests and limits cannot be created
Error from server (Forbidden): pods "demo3" is forbidden: failed quota: mem-cpu-demo: must specify limits.cpu,limits.memory,requests.cpu,requests.memory

The ResourceQuota object adds the following constraints to the default namespace:

• Every container must set a memory request, a memory limit, a CPU request, and a CPU limit.
• The total memory requested by all containers must not exceed 1 GiB.
• The total memory limit of all containers must not exceed 2 GiB.
• The total CPU requested by all containers must not exceed 1 CPU.
• The total CPU limit of all containers must not exceed 2 CPUs.

5). Configuring a Pod quota for a namespace:

[root@node22 limit]# vim limit.yaml
apiVersion: v1
kind: LimitRange
metadata:
  name: limitrange-memory
spec:
  limits:
  - default:
      cpu: 0.5
      memory: 512Mi
    defaultRequest:
      cpu: 0.1
      memory: 256Mi
    max:
      cpu: 1
      memory: 1Gi
    min:
      cpu: 0.1
      memory: 100Mi
    type: Container
---
apiVersion: v1
kind: ResourceQuota
metadata:
  name: mem-cpu-demo
spec:
  hard:
    requests.cpu: "1"
    requests.memory: 1Gi
    limits.cpu: "2"
    limits.memory: 2Gi
---
apiVersion: v1
kind: ResourceQuota
metadata:
  name: pod-demo
spec:
  hard:
    pods: "2"
[root@node22 limit]# kubectl apply -f limit.yaml
limitrange/limitrange-memory configured
resourcequota/mem-cpu-demo unchanged
resourcequota/pod-demo created
[root@node22 limit]# kubectl describe resourcequotas
Name:            mem-cpu-demo
Namespace:       default
Resource         Used  Hard
--------         ----  ----
limits.cpu       0     2
limits.memory    0     2Gi
requests.cpu     0     1
requests.memory  0     1Gi

Name:       pod-demo
Namespace:  default
Resource    Used  Hard
--------    ----  ----
pods        0     2
[root@node22 limit]# kubectl run demo1 --image=nginx
pod/demo1 created
[root@node22 limit]# kubectl run demo2 --image=nginx
pod/demo2 created
[root@node22 limit]# kubectl describe resourcequotas   # at most two Pods can be created
Name:            mem-cpu-demo
Namespace:       default
Resource         Used   Hard
--------         ----   ----
limits.cpu       1      2
limits.memory    1Gi    2Gi
requests.cpu     200m   1
requests.memory  512Mi  1Gi

Name:       pod-demo
Namespace:  default
Resource    Used  Hard
--------    ----  ----
pods        2     2
[root@node22 limit]# kubectl run demo3 --image=nginx
Error from server (Forbidden): pods "demo3" is forbidden: exceeded quota: pod-demo, requested: pods=1, used: pods=2, limited: pods=2
[root@node22 limit]# kubectl delete -f limit.yaml
limitrange "limitrange-memory" deleted
resourcequota "mem-cpu-demo" deleted
resourcequota "pod-demo" deleted
[root@node22 limit]# kubectl delete pod --all
pod "demo1" deleted
pod "demo2" deleted

2. Kubernetes resource monitoring

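1). Deploying Metrics-Server

Metrics-Server aggregates the cluster's core monitoring data and replaces the older Heapster.

Container metrics come mainly from cAdvisor, which is built into the kubelet. With Metrics-Server in place, users can read this monitoring data through the standard Kubernetes API.

• The Metrics API returns only current measurements; it does not store historical data.

• The Metrics API URI is /apis/metrics.k8s.io/, and it is maintained in k8s.io/metrics.

• The API is available only when metrics-server is deployed. metrics-server collects its data by calling the kubelet Summary API.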

Examples:

• http://127.0.0.1:8001/apis/metrics.k8s.io/v1beta1/nodes

• http://127.0.0.1:8001/apis/metrics.k8s.io/v1beta1/nodes/<node-name>

• http://127.0.0.1:8001/apis/metrics.k8s.io/v1beta1/namespaces/<namespace-name>/pods/<pod-name>
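The URL patterns above all share one prefix, so they can be assembled mechanically. The following sketch assumes a hypothetical helper named `metrics_path`; it only builds the path string and does not contact a cluster.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build a Metrics API path for nodes or for a single pod.
metrics_path() {
  local base=/apis/metrics.k8s.io/v1beta1
  case $1 in
    nodes) echo "$base/nodes${2:+/$2}" ;;                  # optional node name
    pod)   echo "$base/namespaces/$2/pods/$3" ;;           # namespace, then pod name
    *)     echo "usage: metrics_path nodes [NODE] | pod NAMESPACE POD" >&2; return 1 ;;
  esac
}

metrics_path nodes              # /apis/metrics.k8s.io/v1beta1/nodes
metrics_path nodes node22       # /apis/metrics.k8s.io/v1beta1/nodes/node22
metrics_path pod default demo   # /apis/metrics.k8s.io/v1beta1/namespaces/default/pods/demo

# On a cluster where metrics-server is running, the path can be queried directly:
#   kubectl get --raw "$(metrics_path nodes)"
```

Using `kubectl get --raw` avoids having to start `kubectl proxy` on 127.0.0.1:8001 first, since kubectl handles authentication to the API server itself.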

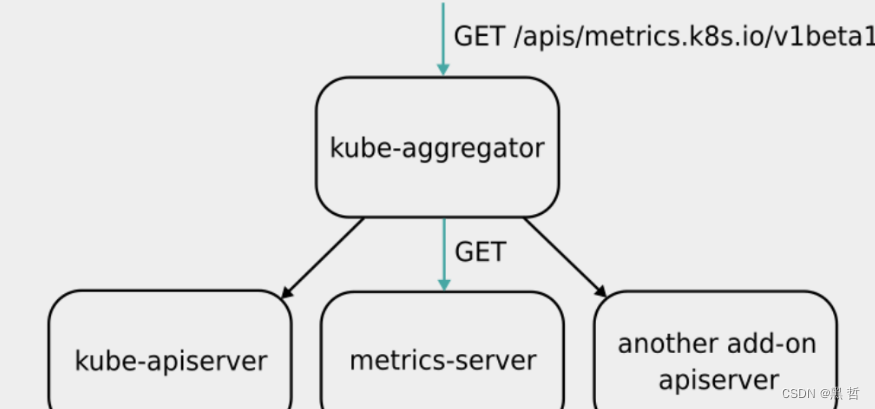

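Metrics Server is not part of kube-apiserver. It is deployed separately and served alongside kube-apiserver through the Aggregator plug-in mechanism, so that clients see a single API endpoint.

kube-aggregator is essentially a proxy server that picks the right API backend based on the request URL.

Metrics-server provides core metrics through the metrics.k8s.io API. It covers only the CPU and memory usage of Nodes and Pods; other, custom metrics are handled by components such as Prometheus.

Deploying metrics-server: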

[root@node22 ~]# mkdir metrics

[root@node22 ~]# cd metrics/

[root@node22 metrics]# wget https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml

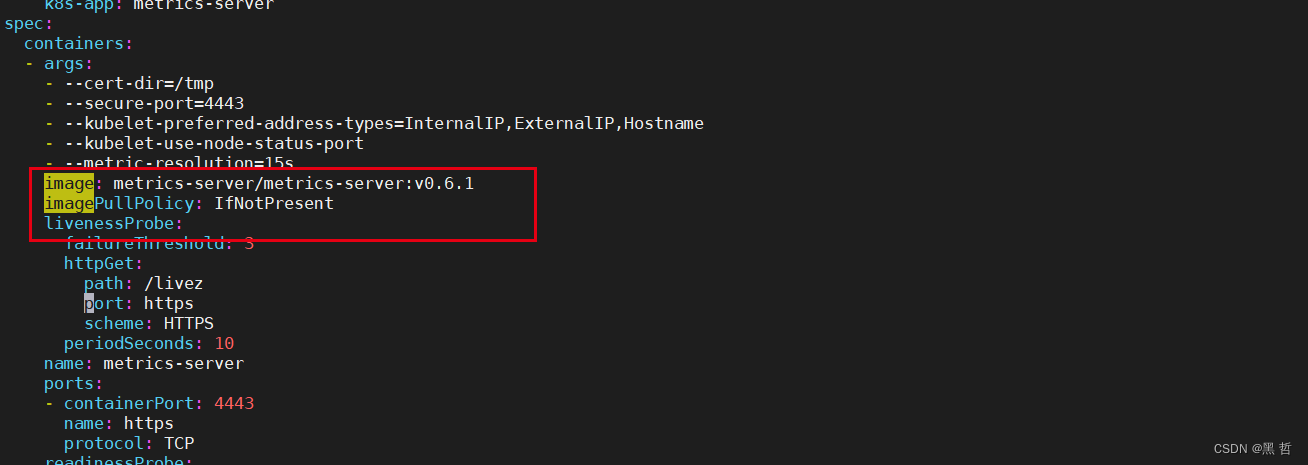

[root@node22 metrics]# vim components.yaml   # change the image path

[root@node22 metrics]# kubectl apply -f components.yaml

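After deploying, check the logs of the metrics-server Pod:

1). Error 1: dial tcp: lookup server2 on 10.96.0.10:53: no such host

There is no DNS server on the internal network, so metrics-server cannot resolve the node names. One fix is to edit the CoreDNS ConfigMap and add each node's host name to a hosts block, so that every Pod can resolve the node names through CoreDNS.

• kubectl edit configmap coredns -n kube-system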

apiVersion: v1
data:
  Corefile: |
    ...
        ready
        hosts {
           172.25.0.11 server1
           172.25.0.12 server2
           172.25.0.13 server3
           fallthrough
        }
        kubernetes cluster.local in-addr.arpa ip6.arpa {

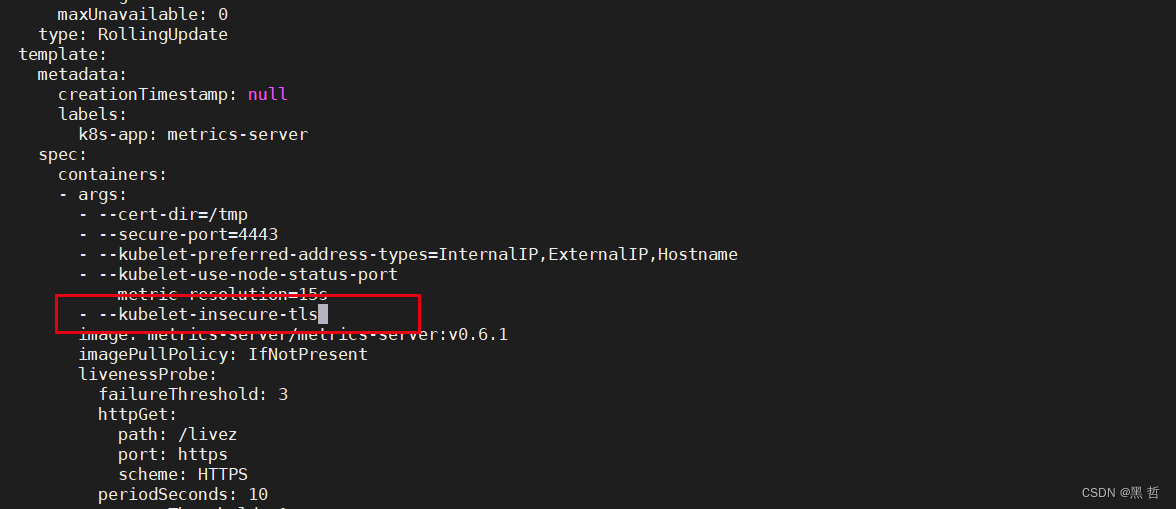
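Typing the hosts block by hand gets tedious as the node count grows. As a rough sketch, the block can be generated from a list of "IP NAME" pairs; `hosts_block` is a hypothetical helper, and the addresses are the ones used in the example above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "IP NAME" pairs on stdin and print a CoreDNS hosts block.
hosts_block() {
  echo "hosts {"
  while read -r ip name; do
    # Skip blank lines so they do not produce empty entries.
    if [ -n "$ip" ]; then
      echo "   $ip $name"
    fi
  done
  echo "   fallthrough"
  echo "}"
}

printf '%s\n' \
  "172.25.0.11 server1" \
  "172.25.0.12 server2" \
  "172.25.0.13 server3" | hosts_block
```

The output still has to be pasted into the Corefile with the correct indentation. The `fallthrough` line matters: without it, names that are not listed in the block would not be passed on to the other CoreDNS plugins.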

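2). Error 2: x509: certificate signed by unknown authority

Metrics Server supports a --kubelet-insecure-tls flag that skips this certificate check. The project documentation states clearly, however, that this approach is not recommended for production use.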
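The walkthrough adds the flag by editing components.yaml by hand. An alternative, shown here only as a sketch, is to patch the running Deployment. It assumes the Deployment is named metrics-server in the kube-system namespace and that its first container already has an args list, as in the upstream manifest; the patch file name is arbitrary.

```shell
#!/usr/bin/env bash
# Write a JSON patch that appends --kubelet-insecure-tls to the first container's args.
cat > insecure-tls-patch.json <<'EOF'
[
  {
    "op": "add",
    "path": "/spec/template/spec/containers/0/args/-",
    "value": "--kubelet-insecure-tls"
  }
]
EOF

# Apply it on a lab cluster only; the flag disables kubelet certificate verification.
#   kubectl -n kube-system patch deployment metrics-server \
#     --type=json --patch-file insecure-tls-patch.json
```

A patch applied this way is lost if components.yaml is re-applied later without the flag, so editing the manifest remains the more durable option.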

[root@node22 metrics]# vim components.yaml

[root@node22 metrics]# kubectl apply -f components.yaml

[root@node22 metrics]# kubectl -n kube-system get pod

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-6444b57c6d-h6gcd 1/1 Running 7 (9h ago) 7d

calico-node-jcwvw 1/1 Running 0 6h39m

calico-node-rl8mx 1/1 Running 7 (9h ago) 7d2h

calico-node-xxksv 1/1 Running 5 (9h ago) 7d2h

coredns-7b56f6bc55-2pwnh 1/1 Running 9 (9h ago) 10d

coredns-7b56f6bc55-g458w 1/1 Running 9 (9h ago) 10d

etcd-node22 1/1 Running 9 (9h ago) 10d

kube-apiserver-node22 1/1 Running 8 (9h ago) 9d

kube-controller-manager-node22 1/1 Running 26 (92m ago) 10d

kube-proxy-8qc8h 1/1 Running 7 (9h ago) 9d

kube-proxy-cscgp 1/1 Running 9 (9h ago) 9d

kube-proxy-cz4r9 1/1 Running 0 6h39m

kube-scheduler-node22 1/1 Running 25 (92m ago) 10d

metrics-server-58fc4b6dbd-7dgd4 1/1 Running 0 52s

[root@node22 metrics]# kubectl top pod

No resources found in default namespace.

[root@node22 metrics]# kubectl top node

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

node22 216m 10% 1211Mi 70%

node33 84m 4% 931Mi 54%

node44 96m 4% 836Mi 48%

Enabling TLS bootstrap certificate signing

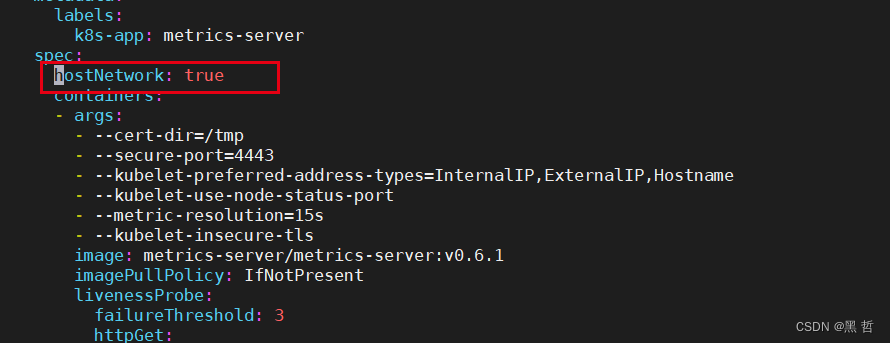

3). Error 3: Error from server (ServiceUnavailable): the server is currently unable to handle the request (get nodes.metrics.k8s.io)

• If metrics-server starts normally and logs no errors, the cause is most likely the network. Change the network mode of the metrics-server Pod:
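The source does not show the exact change. In the listing that follows, the metrics-server Pod has the same IP as its node (192.168.0.44), which suggests the Pod was switched to the host network. Under that assumption, the equivalent patch could look like this; the file name is arbitrary and the Deployment name and namespace are assumed to match the upstream manifest.

```shell
#!/usr/bin/env bash
# Assumed fix: run the metrics-server Pod in the node's network namespace.
cat > hostnetwork-patch.yaml <<'EOF'
spec:
  template:
    spec:
      hostNetwork: true
EOF

# Apply as a strategic merge patch (the default patch type):
#   kubectl -n kube-system patch deployment metrics-server \
#     --patch-file hostnetwork-patch.yaml
```

With the host network enabled, the Pod's listening port is opened on the node itself, so it must not clash with anything already bound there.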

[root@node22 metrics]# kubectl apply -f components.yaml
[root@node22 metrics]# kubectl get pod -n kube-system -o wide
NAME                                       READY   STATUS    RESTARTS        AGE     IP              NODE     NOMINATED NODE   READINESS GATES
calico-kube-controllers-6444b57c6d-h6gcd   1/1     Running   7 (9h ago)      7d      10.244.35.149   node22   <none>           <none>
calico-node-jcwvw                          1/1     Running   0               6h49m   192.168.0.44    node44   <none>           <none>
calico-node-rl8mx                          1/1     Running   7 (9h ago)      7d2h    192.168.0.22    node22   <none>           <none>
calico-node-xxksv                          1/1     Running   5 (9h ago)      7d2h    192.168.0.33    node33   <none>           <none>
coredns-7b56f6bc55-2pwnh                   1/1     Running   9 (9h ago)      10d     10.244.35.150   node22   <none>           <none>
coredns-7b56f6bc55-g458w                   1/1     Running   9 (9h ago)      10d     10.244.35.148   node22   <none>           <none>
etcd-node22                                1/1     Running   9 (9h ago)      10d     192.168.0.22    node22   <none>           <none>
kube-apiserver-node22                      1/1     Running   8 (9h ago)      9d      192.168.0.22    node22   <none>           <none>
kube-controller-manager-node22             1/1     Running   26 (101m ago)   10d     192.168.0.22    node22   <none>           <none>
kube-proxy-8qc8h                           1/1     Running   7 (9h ago)      9d      192.168.0.33    node33   <none>           <none>
kube-proxy-cscgp                           1/1     Running   9 (9h ago)      9d      192.168.0.22    node22   <none>           <none>
kube-proxy-cz4r9                           1/1     Running   0               6h49m   192.168.0.44    node44   <none>           <none>
kube-scheduler-node22                      1/1     Running   25 (102m ago)   10d     192.168.0.22    node22   <none>           <none>
metrics-server-7c77876544-zbz96            1/1     Running   0               37s     192.168.0.44    node44   <none>           <none>

4). Dashboard

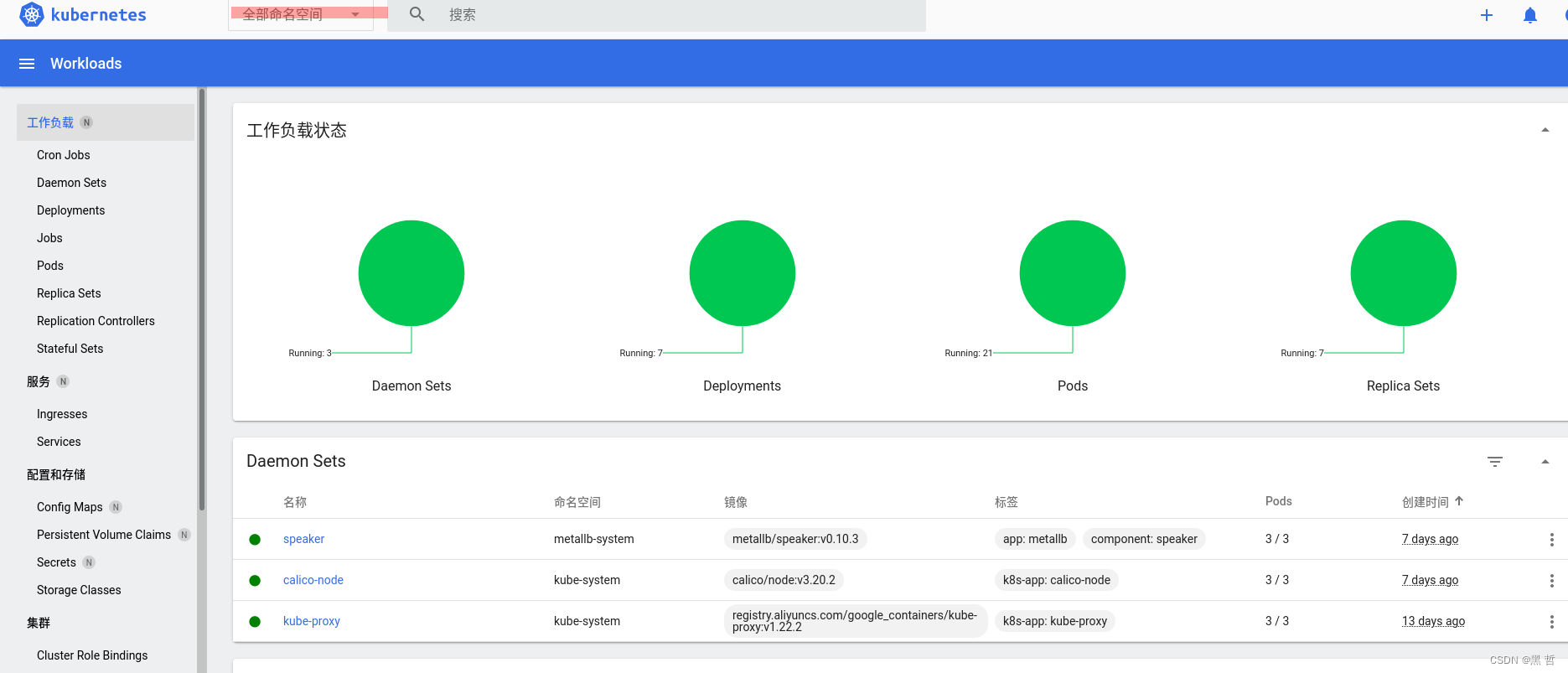

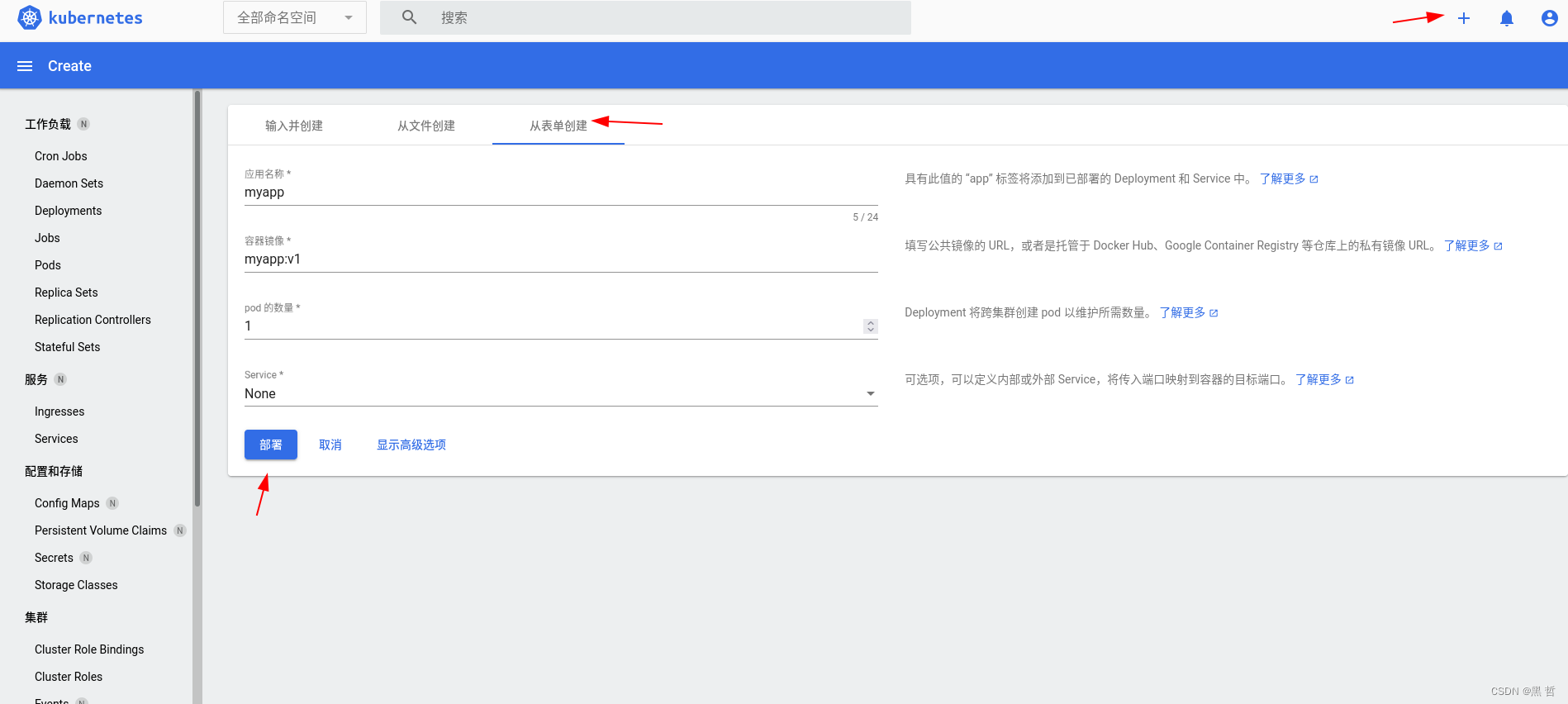

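Dashboard gives users a visual web interface for viewing information about the cluster. With Kubernetes Dashboard, users can deploy containerized applications, monitor their status, troubleshoot problems, and manage Kubernetes resources.

Project page: https://github.com/kubernetes/dashboard

Download the deployment manifest: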

[root@node22 ~]# mkdir dashboard

[root@node22 ~]# cd dashboard/

[root@node22 dashboard]# wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.5.0/aio/deploy/recommended.yaml

[root@node22 dashboard]# kubectl apply -f recommended.yaml

namespace/kubernetes-dashboard created

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

deployment.apps/dashboard-metrics-scraper created

[root@node22 dashboard]# kubectl get ns

NAME STATUS AGE

default Active 11d

ingress-nginx Active 8d

kube-node-lease Active 11d

kube-public Active 11d

kube-system Active 11d

kubernetes-dashboard Active 20s

metallb-system Active 10d

nfs-client-provisioner Active 7d12h

test Active 8d

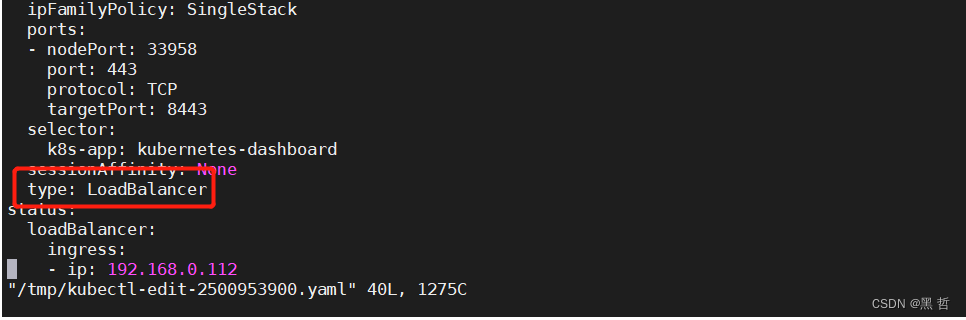

[root@node22 dashboard]# kubectl -n kubernetes-dashboard edit svc kubernetes-dashboard

service/kubernetes-dashboard edited

[root@node22 dashboard]# kubectl -n kubernetes-dashboard get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

dashboard-metrics-scraper ClusterIP 10.100.32.222 <none> 8000/TCP 3m37s

kubernetes-dashboard LoadBalancer 10.106.229.89 192.168.0.112 443:33958/TCP 3m38s

[root@node22 dashboard]# kubectl -n kubernetes-dashboard get secrets

NAME TYPE DATA AGE

default-token-j88k4 kubernetes.io/service-account-token 3 8m3s

kubernetes-dashboard-certs Opaque 0 8m3s

kubernetes-dashboard-csrf Opaque 1 8m3s

kubernetes-dashboard-key-holder Opaque 2 8m3s

kubernetes-dashboard-token-q72h6 kubernetes.io/service-account-token 3 8m3s

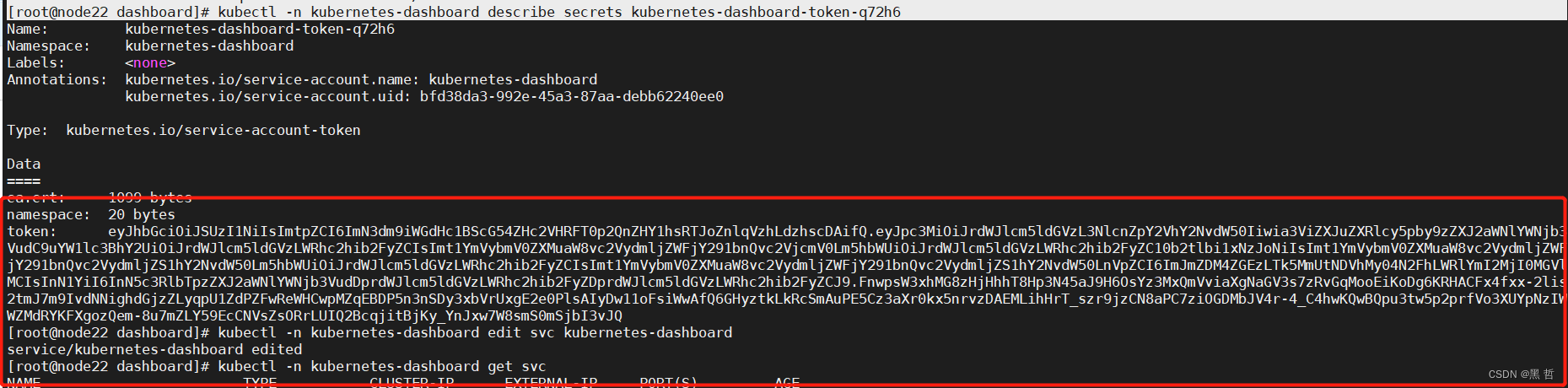

[root@node22 dashboard]# kubectl -n kubernetes-dashboard describe secrets kubernetes-dashboard-token-q72h6

View the login token.

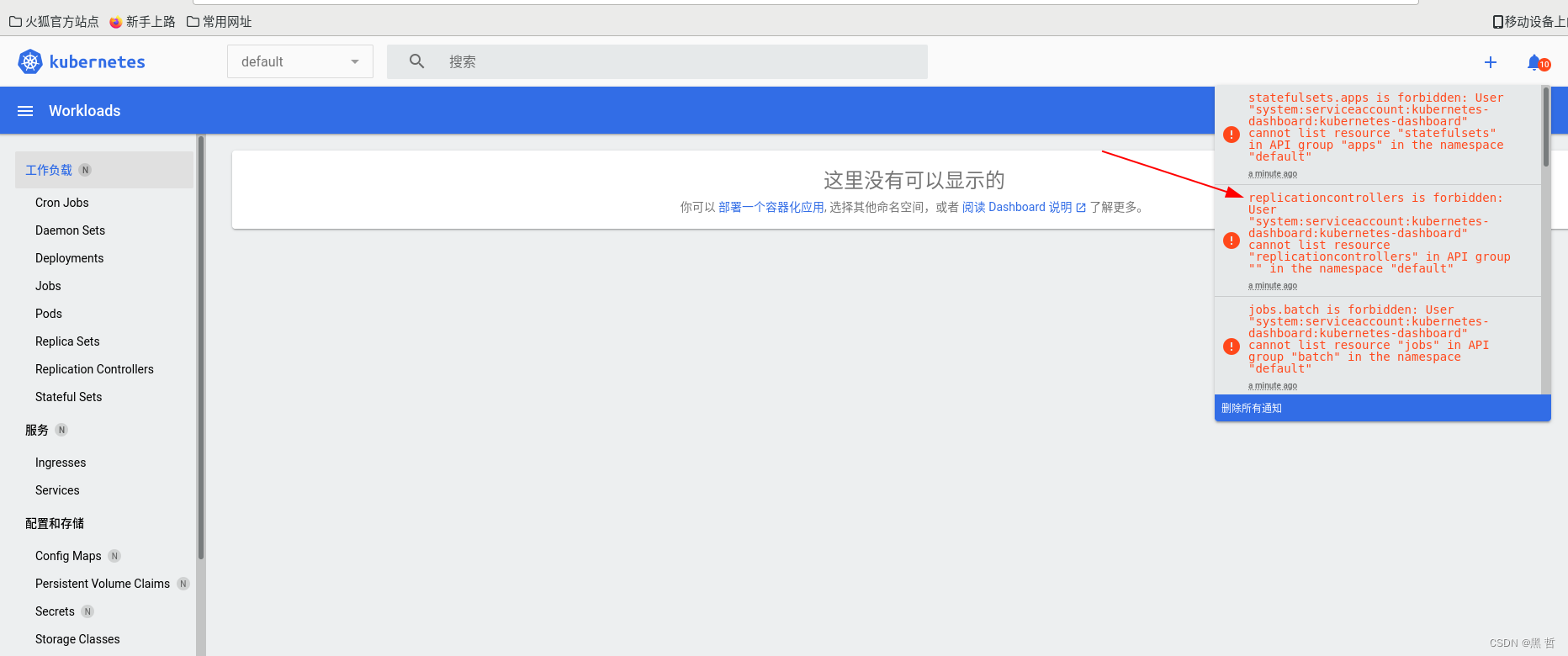

## By default the kubernetes-dashboard ServiceAccount has no permission to operate on the cluster; grant it access with an RBAC role binding.

[root@node22 dashboard]# vim rbac.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard-admin
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard

[root@node22 dashboard]# kubectl apply -f rbac.yaml

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard-admin created

## Refresh the page in the browser and the data is displayed.

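3. Helm

Helm is the package manager for Kubernetes applications. It mainly manages charts, much as yum manages packages on a Linux system.

A Helm chart is a set of YAML files that packages a Kubernetes-native application. Some of the application's metadata can be customized at deployment time, which makes the application easier to distribute.

For application publishers, Helm can package an application, manage its dependencies and versions, and publish it to a chart repository.

For users, Helm removes the need to write complex deployment manifests: applications can be searched for, installed, upgraded, rolled back, and uninstalled on Kubernetes in a simple way.

The biggest difference between Helm v3 and v2 is that Tiller has been removed.

1). The latest Helm version is given here as v3.1.0 (the installation below uses v3.9.0). Official site: https://helm.sh/docs/intro/

Installing Helm: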

[root@node22 ~]# mkdir helm
[root@node22 ~]# cd helm/
[root@node22 helm]# cp /root/helm-v3.9.0-linux-amd64.tar.gz .
[root@node22 helm]# tar zxf helm-v3.9.0-linux-amd64.tar.gz
[root@node22 helm]# ls
helm-v3.9.0-linux-amd64.tar.gz  linux-amd64
[root@node22 helm]# cd linux-amd64/
[root@node22 linux-amd64]# mv helm /usr/local/bin

2). Enabling helm command completion:

[root@node22 ~]# echo "source <(helm completion bash)" >> ~/.bashrc
[root@node22 ~]# source .bashrc

3). Searching the official Helm Hub chart index:

[root@node22 ~]# helm search hub nginx
URL                                                  CHART VERSION   APP VERSION   DESCRIPTION
https://artifacthub.io/packages/helm/mirantis/n...   0.1.0           1.16.0        A NGINX Docker Community based Helm chart for K...
https://artifacthub.io/packages/helm/bitnami/nginx   13.2.3          1.23.1        NGINX Open Source is a web server that can be a...
https://artifacthub.io/packages/helm/bitnami-ak...   13.2.1          1.23.1        NGINX Open Source is a web server that can be a...
https://artifacthub.io/packages/helm/test-nginx...   0.1.0           1.16.0        A Helm chart for Kubernetes
https://artifacthub.io/packages/helm/wiremind/n...   2.1.1                         An NGINX HTTP server
https://artifacthub.io/packages/helm/dysnix/nginx    7.1.8           1.19.4        Chart for the nginx server
https://artifacthub.io/packages/helm/zrepo-test...   5.1.5           1.16.1        Chart for the nginx server
https://artifacthub.io/packages/helm/cloudnativ...   3.2.0           1.16.0        Chart for the nginx server

4). Adding a third-party chart repository to Helm:

[root@node22 ~]# helm repo add bitnami https://charts.bitnami.com/bitnami   # add the repository
"bitnami" has been added to your repositories
[root@node22 ~]# helm search repo nginx   # search the added repositories
NAME                               CHART VERSION   APP VERSION   DESCRIPTION
bitnami/nginx                      13.2.3          1.23.1        NGINX Open Source is a web server that can be a...
bitnami/nginx-ingress-controller   9.3.6           1.3.1         NGINX Ingress Controller is an Ingress controll...
bitnami/nginx-intel                2.1.1           0.4.7         NGINX Open Source for Intel is a lightweight se...
bitnami/kong                       5.0.2           2.7.0         Kong is a scalable, open source API layer (aka ...

Helm supports several ways to install a chart. (By default Helm reads ~/.kube/config to connect to the Kubernetes cluster.)

• helm install redis-ha stable/redis-ha
• helm install redis-ha redis-ha-4.4.0.tgz
• helm install redis-ha path/redis-ha
• helm install redis-ha https://example.com/charts/redis-ha-4.4.0.tgz
• helm pull stable/redis-ha   // download the chart locally
• helm status redis-ha   // show the release status
• helm uninstall redis-ha   // uninstall the release

5). Building a Helm chart:

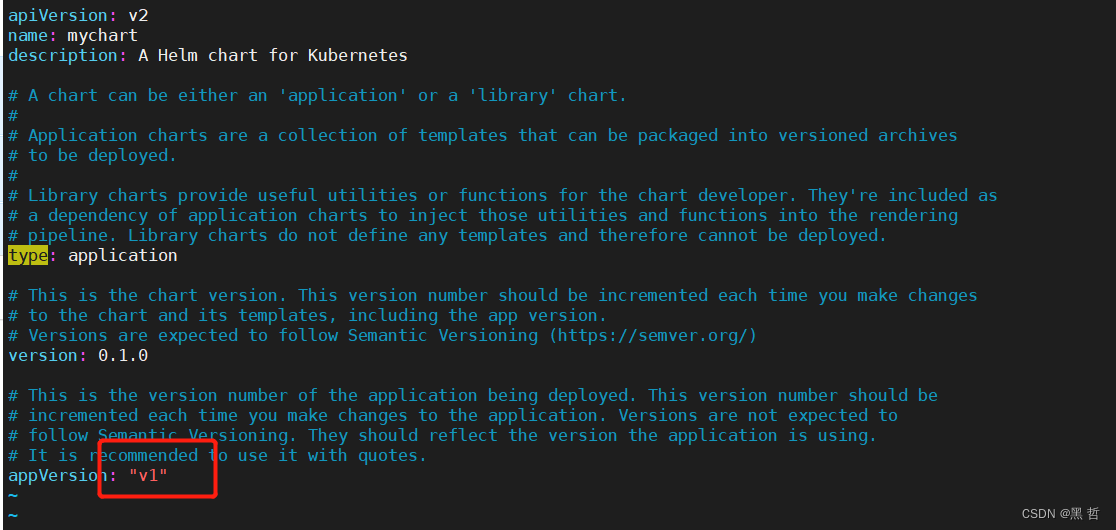

[root@node22 helm]# helm create mychart   # create mychart
Creating mychart
[root@node22 helm]# ls   # a mychart directory appears
helm-v3.9.0-linux-amd64.tar.gz  linux-amd64  metrics-server  metrics-server-3.8.2.tgz  mychart  nfs-client-provisioner  nfs-client-provisioner-4.0.11.tgz
[root@node22 helm]# cd mychart/
[root@node22 mychart]# ls   # the chart skeleton is generated automatically
charts  Chart.yaml  templates  values.yaml
[root@node22 mychart]# yum install -y tree   # install the tree command
[root@node22 mychart]# tree .   # show the directory structure
.
├── charts
├── Chart.yaml
├── templates
│   ├── deployment.yaml
│   ├── _helpers.tpl
│   ├── hpa.yaml
│   ├── ingress.yaml
│   ├── NOTES.txt
│   ├── serviceaccount.yaml
│   ├── service.yaml
│   └── tests
│       └── test-connection.yaml
└── values.yaml

3 directories, 10 files

Write the application description for mychart:

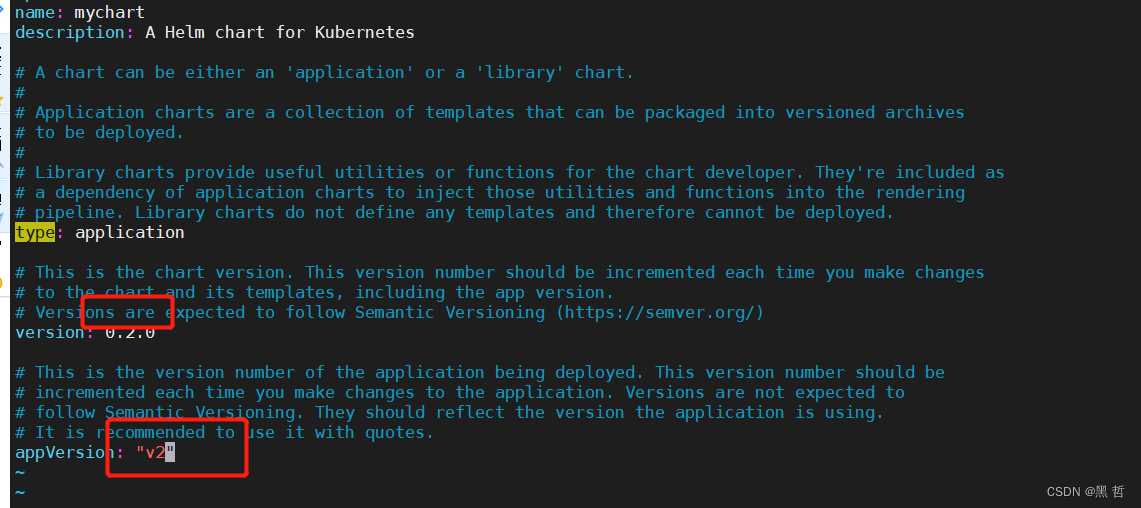

[root@node22 mychart]# vim Chart.yaml

Write the application deployment settings:

[root@node22 ~]# cd ingress/

[root@node22 ingress]# ls

auth deployment-2.yaml deployment.yaml deploy.yaml ingress.yaml tls.crt tls.key

[root@node22 ingress]# kubectl delete -f .   # delete the previously deployed ingress-nginx

[root@node22 ingress]# cd

[root@node22 ~]# cd helm/

[root@node22 helm]# helm repo add ingress-nginx https://kubernetes.github.io/ingress-nginx

"ingress-nginx" has been added to your repositories

[root@node22 helm]# helm pull ingress-nginx/ingress-nginx   # pull the chart

[root@node22 helm]# tar zxf ingress-nginx-4.2.3.tgz

[root@node22 helm]# cd ingress-nginx/

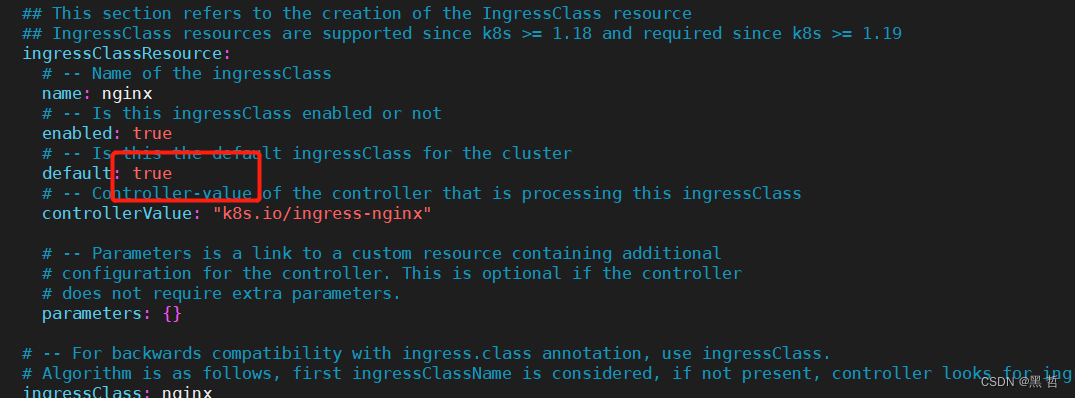

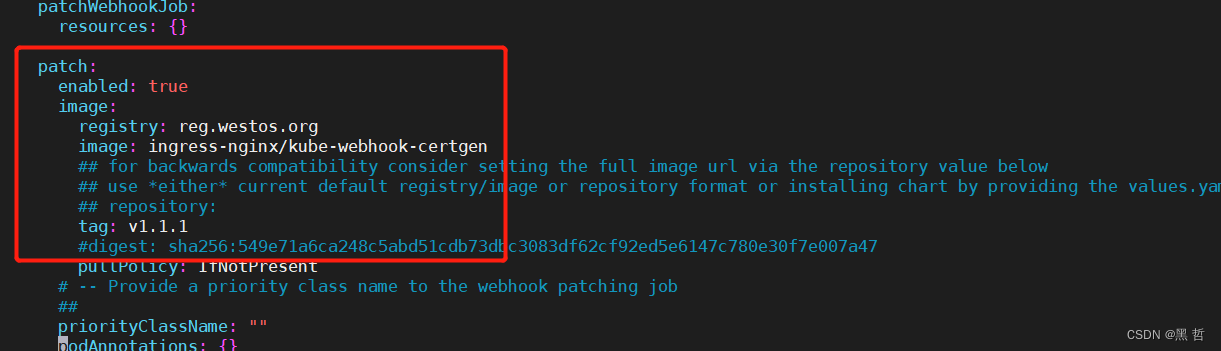

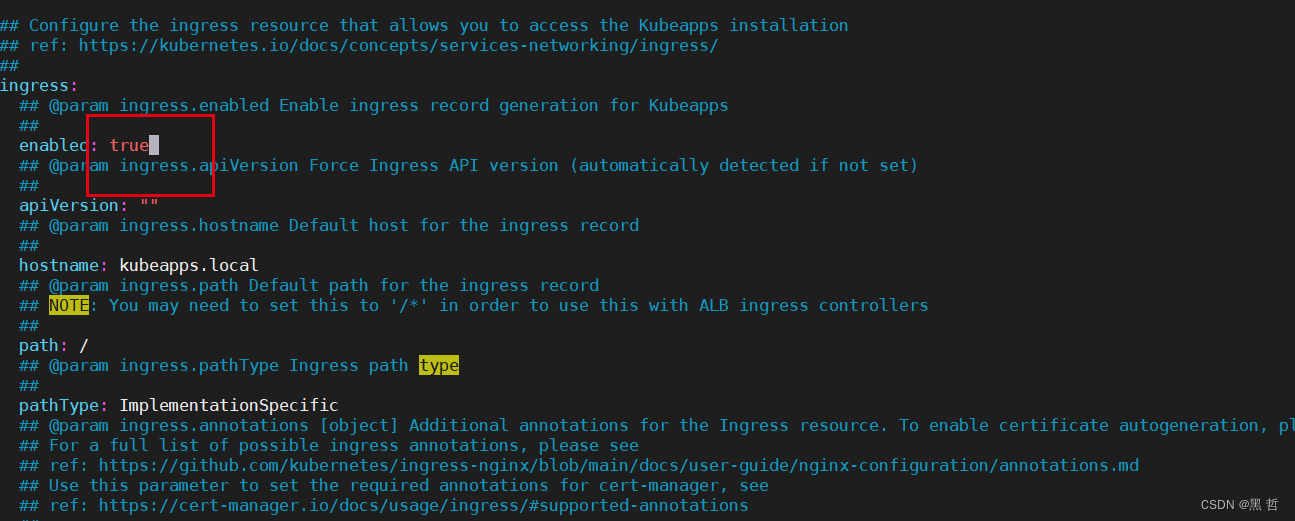

[root@node22 ingress-nginx]# vim values.yaml

[root@node22 ingress-nginx]# kubectl create ns ingress-nginx

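5). Deploying nfs-client-provisioner with Helm:

Remove the earlier deployment:

[root@node22 ~]# cd nfs

[root@node22 nfs]# kubectl delete -f .   # if it is unclear which YAML files were applied, delete them all

[root@node22 ~]# kubectl get pod -A   # the old Pods are gone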

NAMESPACE NAME READY STATUS RESTARTS AGE

ingress-nginx ingress-nginx-controller-5bbfbbb9c7-vxdtr 1/1 Running 0 8d

kube-flannel kube-flannel-ds-2wf6n 1/1 Running 0 155m

kube-flannel kube-flannel-ds-h7fvp 1/1 Running 0 155m

kube-flannel kube-flannel-ds-rvhfp 1/1 Running 0 155m

kube-system coredns-7b56f6bc55-2pwnh 1/1 Running 3 (7d23h ago) 11d

kube-system coredns-7b56f6bc55-g458w 1/1 Running 3 (7d23h ago) 11d

kube-system etcd-node22 1/1 Running 3 (7d23h ago) 11d

kube-system kube-apiserver-node22 1/1 Running 2 (7d23h ago) 10d

kube-system kube-controller-manager-node22 1/1 Running 17 (7d ago) 11d

kube-system kube-proxy-8qc8h 1/1 Running 8 (<invalid> ago) 10d

kube-system kube-proxy-cscgp 1/1 Running 2 (7d23h ago) 10d

kube-system kube-proxy-zh89l 1/1 Running 0 10d

kube-system kube-scheduler-node22 1/1 Running 16 (7d ago) 11d

kubernetes-dashboard dashboard-metrics-scraper-799d786dbf-sdll7 1/1 Running 0 174m

kubernetes-dashboard kubernetes-dashboard-546cbc58cd-sct28 1/1 Running 0 174m

metallb-system controller-5c97f5f498-fvg5p 1/1 Running 1 (<invalid> ago) 8d

metallb-system speaker-2mlfr 1/1 Running 32 (<invalid> ago) 10d

metallb-system speaker-jkh2b 1/1 Running 12 (7d ago) 10d

metallb-system speaker-s66q5 1/1 Running 2 (<invalid> ago) 10d

• Set up the external NFS server beforehand.

[root@node22 ~]# helm repo add kubesphere https://charts.kubesphere.io/main   # add the repository

"kubesphere" has been added to your repositories

[root@node22 ~]# helm repo list   # list all repositories

NAME URL

bitnami https://charts.bitnami.com/bitnami

kubesphere https://charts.kubesphere.io/main

[root@node22 ~]# helm search repo nfs-client   # look up nfs-client-provisioner

NAME CHART VERSION APP VERSION DESCRIPTION

kubesphere/nfs-client-provisioner 4.0.11 4.0.2 nfs-client is an automatic provisioner that use...

[root@node22 helm]# helm pull kubesphere/nfs-client-provisioner   # pull the chart package (latest version by default)

[root@node22 helm]# tar zxf nfs-client-provisioner-4.0.11.tgz   # unpack it

[root@node22 helm]# cd nfs-client-provisioner/

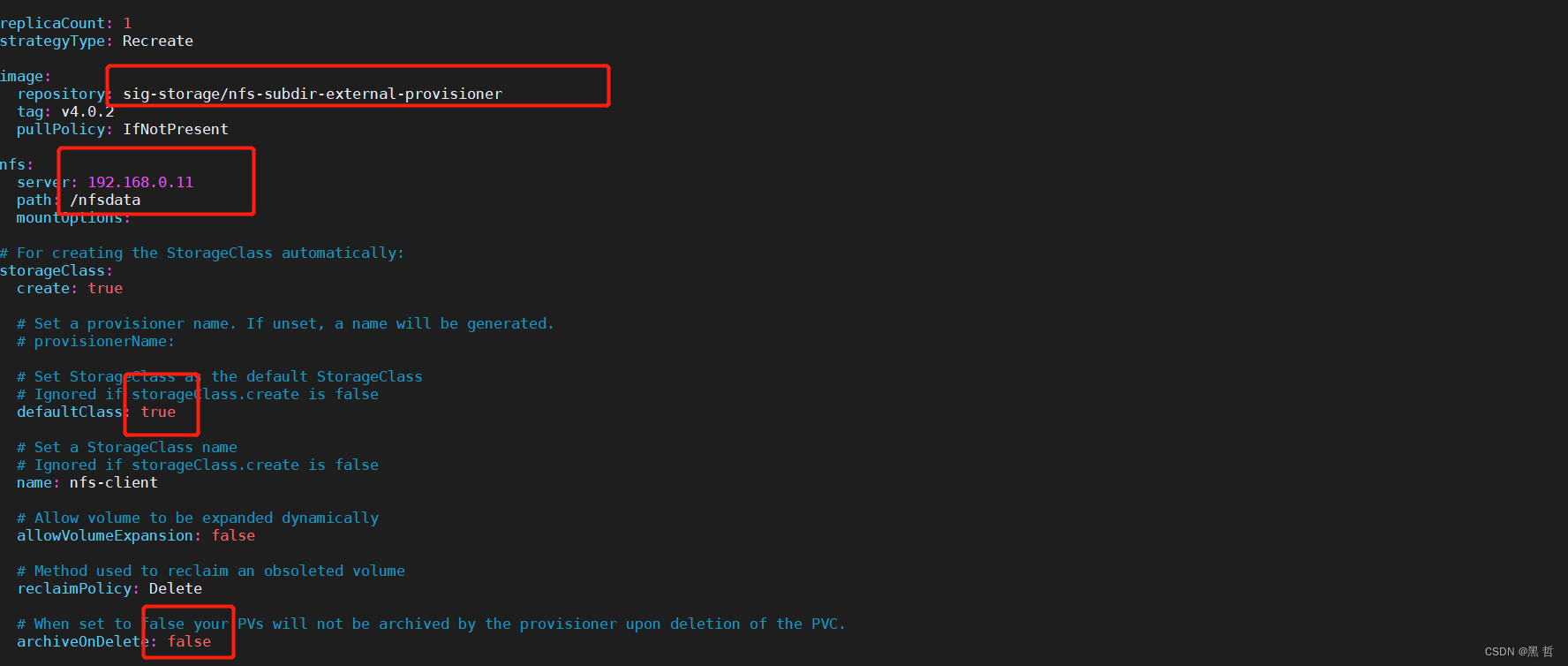

[root@node22 nfs-client-provisioner]# vim values.yaml   # edit the deployment values

[root@node22 nfs-client-provisioner]# helm -n nfs-client-provisioner install nfs-client-provisioner .   # install nfs-client-provisioner from the chart in the current directory

NAME: nfs-client-provisioner

LAST DEPLOYED: Mon Sep 5 16:23:52 2022

NAMESPACE: nfs-client-provisioner

STATUS: deployed

REVISION: 1

TEST SUITE: None

[root@node22 nfs-client-provisioner]# helm list -A   # list releases in all namespaces

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

nfs-client-provisioner nfs-client-provisioner 1 2022-09-05 16:23:52.924963975 +0800 CST deployed nfs-client-provisioner-4.0.11 4.0.2

[root@node22 nfs-client-provisioner]# kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

nfs-client (default) cluster.local/nfs-client-provisioner1 Delete Immediate false 2m41s

[root@node22 ~]# cd nfs/

[root@node22 nfs]# kubectl apply -f pvc.yaml

persistentvolumeclaim/test-claim created

[root@node11 harbor]# cd /nfsdata   # volume data here is deleted when the claim is reclaimed

[root@node11 nfsdata]# ls

default-data-mysql-0-pvc-1b48f075-3d3d-4ee9-a1ca-97b5b2792208 index.html pv1 pv2 pv3

6). Deploying metrics-server with Helm:

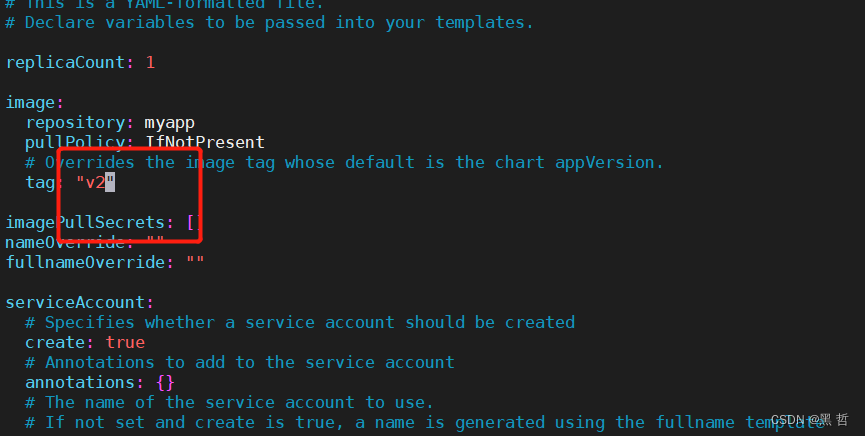

[root@node22 metrics]# kubectl delete -f components.yaml
[root@node22 helm]# helm repo add metrics-server https://kubernetes-sigs.github.io/metrics-server/   # add the repository
"metrics-server" has been added to your repositories
[root@node22 helm]# helm pull metrics-server/metrics-server   # pull the chart
[root@node22 helm]# tar zxf metrics-server-3.8.2.tgz
[root@node22 helm]# cd metrics-server/
[root@node22 metrics-server]# vim values.yaml
[root@node22 metrics-server]# helm -n kube-system install metrics-server .   # installed successfully
NAME: metrics-server
LAST DEPLOYED: Mon Sep 5 16:51:33 2022
NAMESPACE: kube-system
STATUS: deployed
REVISION: 1
TEST SUITE: None
NOTES:
***********************************************************************
* Metrics Server                                                      *
***********************************************************************
  Chart version: 3.8.2
  App version:   0.6.1
  Image tag:     metrics-server/metrics-server:v0.6.1
***********************************************************************
[root@node22 ingress]# cd
[root@node22 ~]# cd helm/
[root@node22 helm]# cd mychart/
[root@node22 mychart]# vim values.yaml
[root@node22 ~]# cd helm/

7). Packaging the application

[root@node22 helm]# helm package mychart   # package the chart

Successfully packaged chart and saved it to: /root/helm/mychart-0.1.0.tgz

8). Creating a local charts repository

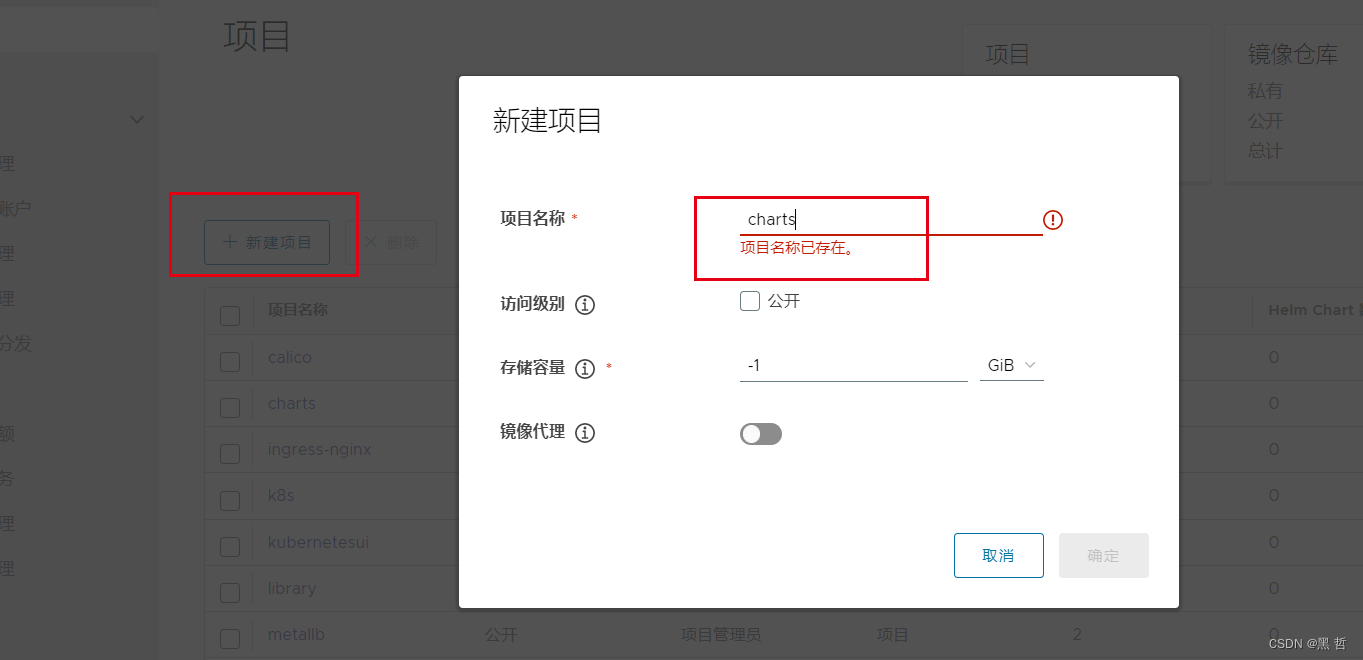

9). Adding the local private repository

[root@node22 helm]# cd /etc/docker/certs.d/reg.westos.org/
[root@node22 reg.westos.org]# cp ca.crt /etc/pki/ca-trust/source/anchors/   # trust the registry CA to resolve the certificate problem
[root@node22 ~]# update-ca-trust   # refresh the trusted certificates
[root@node22 ~]# helm repo add local http://reg.westos.org/chartrepo/charts   # add the local private repository
"local" has been added to your repositories

10). Installing the helm-push plugin

[root@node22 ~]# helm env   # find the plugin directory
HELM_BIN="helm"
HELM_CACHE_HOME="/root/.cache/helm"
HELM_CONFIG_HOME="/root/.config/helm"
HELM_DATA_HOME="/root/.local/share/helm"
HELM_DEBUG="false"
HELM_KUBEAPISERVER=""
HELM_KUBEASGROUPS=""
HELM_KUBEASUSER=""
HELM_KUBECAFILE=""
HELM_KUBECONTEXT=""
HELM_KUBETOKEN=""
HELM_MAX_HISTORY="10"
HELM_NAMESPACE="default"
HELM_PLUGINS="/root/.local/share/helm/plugins"
HELM_REGISTRY_CONFIG="/root/.config/helm/registry/config.json"
HELM_REPOSITORY_CACHE="/root/.cache/helm/repository"
HELM_REPOSITORY_CONFIG="/root/.config/helm/repositories.yaml"
[root@node22 ~]# mkdir -p /root/.local/share/helm/plugins   # create the plugin directory
[root@node22 ~]# cd /root/.local/share/helm/plugins
[root@node22 plugins]# mkdir helm-push
[root@node22 helm]# tar zxf helm-push_0.10.2_linux_amd64.tar.gz -C ~/.local/share/helm/plugins/helm-push
[root@node22 helm-push]# helm plugin list
NAME      VERSION   DESCRIPTION
cm-push   0.10.1    Push chart package to ChartMuseum

11). Uploading

[root@node22 helm]# helm cm-push mychart-0.1.0.tgz local   # push mychart to the private repository

The push fails with an authentication problem; supplying credentials resolves it:

[root@node22 helm]# helm cm-push mychart-0.1.0.tgz local -u admin -p westos

Pushing mychart-0.1.0.tgz to local...

Done.

[root@node22 helm]# helm search repo mychart   # the chart cannot be found yet

No results found

[root@node22 helm]# helm repo update local   # refresh the local repository index

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "local" chart repository

Update Complete. ⎈Happy Helming!⎈

[root@node22 helm]# helm search repo mychart

NAME CHART VERSION APP VERSION DESCRIPTION

local/mychart 0.1.0 v1 A Helm chart for Kubernetes


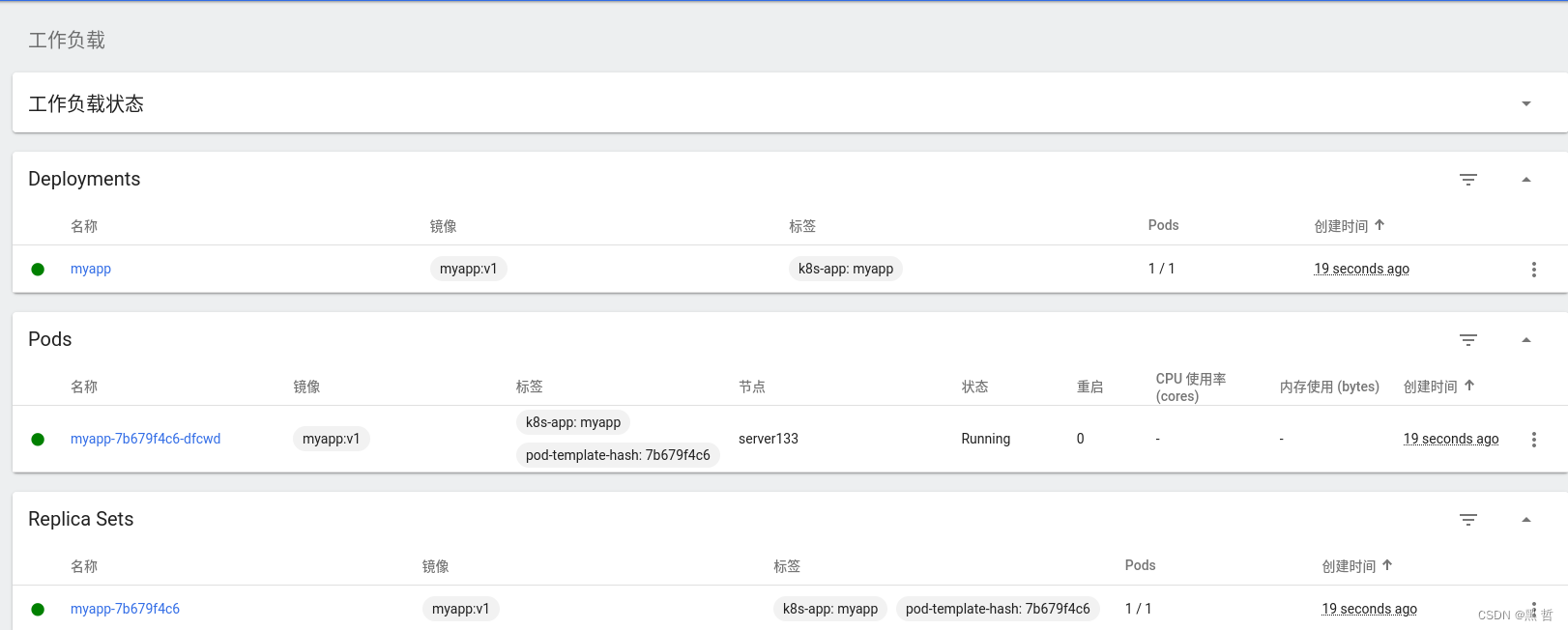

[root@node22 helm]# helm install myapp local/mychart   # install the chart

NAME: myapp

LAST DEPLOYED: Tue Sep 6 04:30:07 2022

NAMESPACE: default

STATUS: deployed

REVISION: 1

NOTES:

1. Get the application URL by running these commands:

http://myapp.westos.org/

12). Upgrading and rolling back:

[root@node22 helm]# cd mychart/

[root@node22 mychart]# vim Chart.yaml

[root@node22 mychart]# vim values.yaml

[root@node22 mychart]# cd ..

[root@node22 helm]# helm package mychart

Successfully packaged chart and saved it to: /root/helm/mychart-0.2.0.tgz

[root@node22 helm]# helm cm-push mychart-0.2.0.tgz local -u admin -p westos

Pushing mychart-0.2.0.tgz to local...

Done.

[root@node22 helm]# helm repo update local   # refresh the repository index

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "local" chart repository

Update Complete. ⎈Happy Helming!⎈

[root@node22 helm]# helm search repo mychart   # check the available version

NAME CHART VERSION APP VERSION DESCRIPTION

local/mychart 0.2.0 v2 A Helm chart for Kubernetes

[root@node22 helm]# helm upgrade myapp local/mychart   # upgrade the release

Release "myapp" has been upgraded. Happy Helming!

NAME: myapp

LAST DEPLOYED: Tue Sep 6 04:36:45 2022

NAMESPACE: default

STATUS: deployed

REVISION: 2

NOTES:

1. Get the application URL by running these commands:

http://myapp.westos.org/

Rolling back:

[root@node22 helm]# helm rollback myapp 1   # roll back to revision 1

Rollback was a success! Happy Helming!

[root@node22 helm]# helm history myapp   # show the revision history

REVISION UPDATED STATUS CHART APP VERSION DESCRIPTION

1 Tue Sep 6 04:30:07 2022 superseded mychart-0.1.0 v1 Install complete

2 Tue Sep 6 04:36:45 2022 superseded mychart-0.2.0 v2 Upgrade complete

3 Tue Sep 6 04:39:03 2022 deployed mychart-0.1.0 v1 Rollback to 1

[root@node22 helm]# helm uninstall myapp   # remove the myapp release

release "myapp" uninstalled
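When upgrades and rollbacks are scripted, it helps to know which revision is currently live. The sketch below reads the table printed by `helm history` and reports the revision whose status is deployed. `deployed_revision` is a hypothetical helper, and the sample input is copied from the history listing above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the revision number whose STATUS is "deployed".
# Reads `helm history` table output on stdin. The UPDATED column contains
# spaces, so every field after the first is scanned instead of relying on
# a fixed column position.
deployed_revision() {
  awk 'NR > 1 { for (i = 2; i <= NF; i++) if ($i == "deployed") { print $1; exit } }'
}

deployed_revision <<'EOF'
REVISION UPDATED STATUS CHART APP VERSION DESCRIPTION
1 Tue Sep 6 04:30:07 2022 superseded mychart-0.1.0 v1 Install complete
2 Tue Sep 6 04:36:45 2022 superseded mychart-0.2.0 v2 Upgrade complete
3 Tue Sep 6 04:39:03 2022 deployed mychart-0.1.0 v1 Rollback to 1
EOF
# prints 3
```

Note that a rollback does not rewind the counter: rolling back to revision 1 created revision 3, which carries the 0.1.0 chart again. On a live release the function would be fed with `helm history myapp | deployed_revision`.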

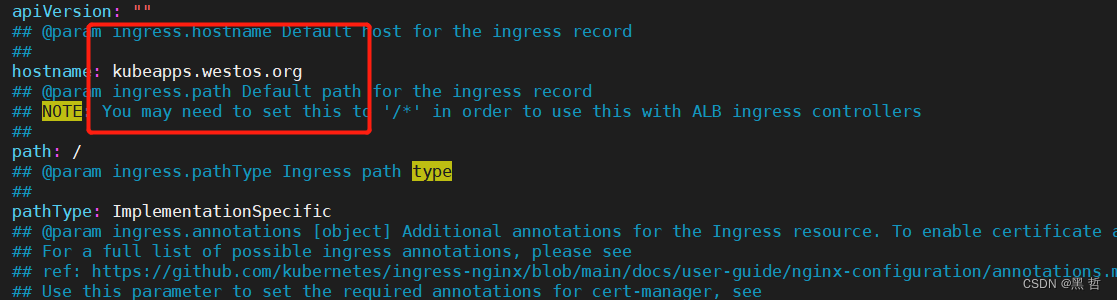

12). Deploying Kubeapps to provide a web UI for managing Helm:

[root@node22 helm]# helm pull bitnami/kubeapps --version 8.1.11

[root@node22 helm]# tar zxf kubeapps-8.1.11.tgz

[root@node22 helm]# cd kubeapps/

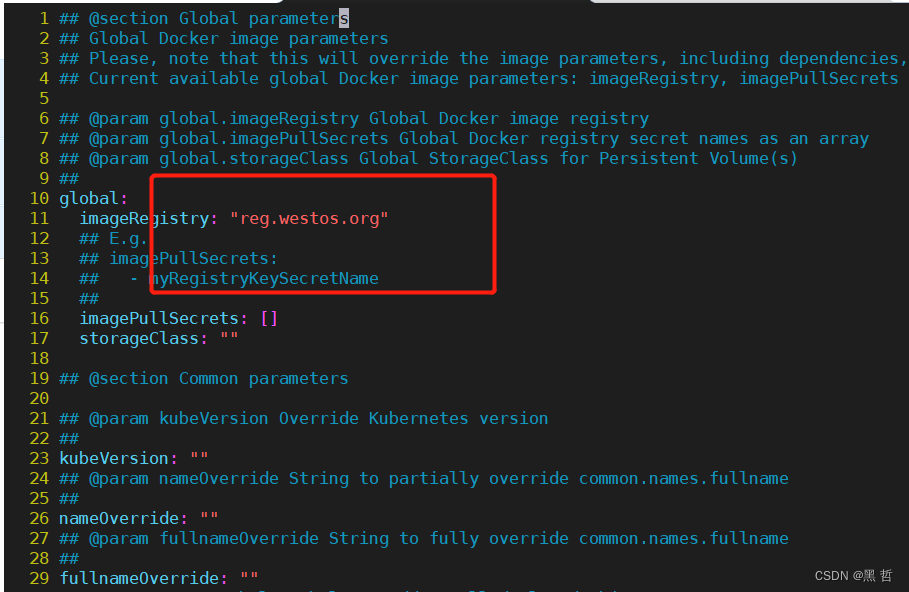

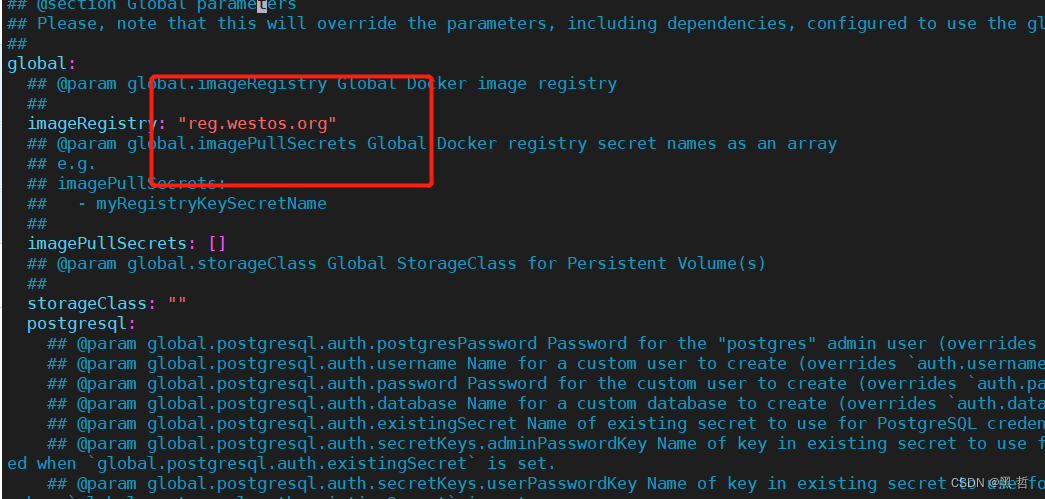

[root@node22 kubeapps]# vim values.yaml

[root@node22 charts]# ls

common postgresql redis

[root@node22 charts]# cd postgresql/

[root@node22 postgresql]# vim values.yaml

[root@node22 kubeapps]# kubectl create namespace kubeapps   # create the namespace

namespace/kubeapps created

[root@node22 kubeapps]# helm -n kubeapps install kubeapps .   # install the chart

[root@node22 kubeapps]# kubectl get pod -n kubeapps

NAME READY STATUS RESTARTS AGE

apprepo-kubeapps-sync-bitnami-8bp6s-rgp76 1/1 Running 0 4m46s

kubeapps-5c9f6f9f78-qwccl 1/1 Running 0 10m

kubeapps-5c9f6f9f78-xpchk 1/1 Running 0 10m

kubeapps-internal-apprepository-controller-578d9cbfb4-7fskh 1/1 Running 0 10m

kubeapps-internal-dashboard-76d4f8678b-r7st6 1/1 Running 0 10m

kubeapps-internal-dashboard-76d4f8678b-ttd5k 1/1 Running 0 10m

kubeapps-internal-kubeappsapis-5ff75b9686-2btdw 1/1 Running 0 10m

kubeapps-internal-kubeappsapis-5ff75b9686-st8mm 1/1 Running 0 10m

kubeapps-internal-kubeops-798b96fc-8w6zx 1/1 Running 0 10m

kubeapps-internal-kubeops-798b96fc-tbvsh 1/1 Running 0 10m

kubeapps-postgresql-0 1/1 Running 0 10m

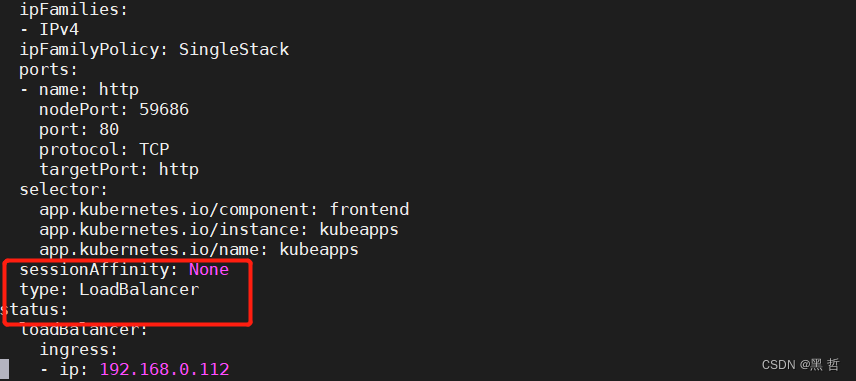

[root@node22 kubeapps]# kubectl -n kubeapps edit svc kubeapps

service/kubeapps edited

[root@node22 kubeapps]# kubectl get svc -n kubeapps

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubeapps LoadBalancer 10.99.251.221 192.168.0.112 80:59686/TCP 14m

kubeapps-internal-dashboard ClusterIP 10.105.13.222 <none> 8080/TCP 14m

kubeapps-internal-kubeappsapis ClusterIP 10.108.2.177 <none> 8080/TCP 14m

kubeapps-internal-kubeops ClusterIP 10.103.206.129 <none> 8080/TCP 14m

kubeapps-postgresql ClusterIP 10.108.191.73 <none> 5432/TCP 14m

kubeapps-postgresql-hl ClusterIP None <none> 5432/TCP 14m

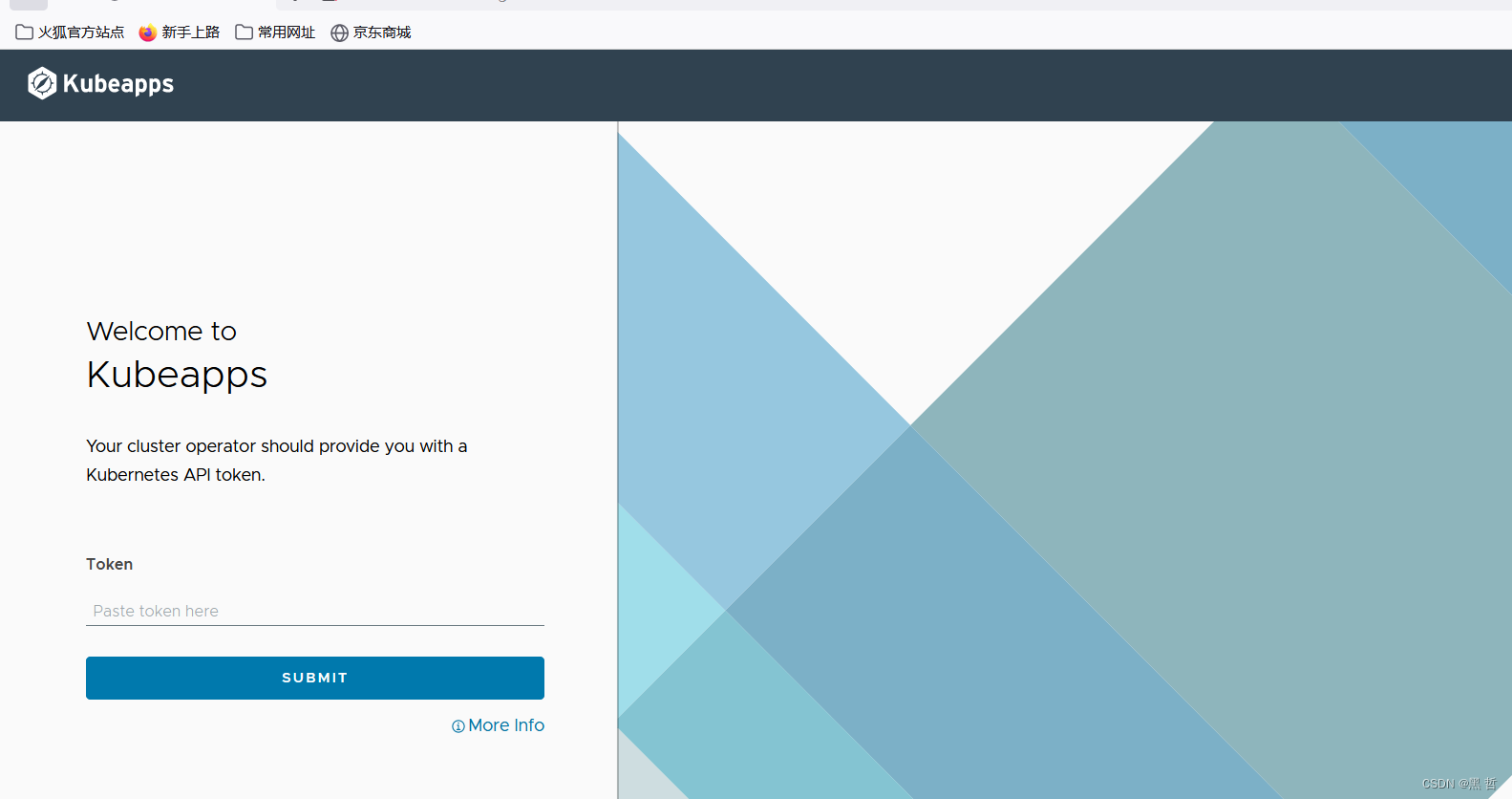

Access the Kubeapps dashboard at 192.168.0.112.

[root@node22 kubeapps]# kubectl create serviceaccount kubeapps-operator -n kubeapps

serviceaccount/kubeapps-operator created

[root@node22 kubeapps]# kubectl create clusterrolebinding kubeapps-operator --clusterrole=cluster-admin --serviceaccount=kubeapps:kubeapps-operator

clusterrolebinding.rbac.authorization.k8s.io/kubeapps-operator created

[root@node22 kubeapps]# kubectl -n kubeapps get sa

NAME SECRETS AGE

default 1 23m

kubeapps-internal-apprepository-controller 1 22m

kubeapps-internal-kubeappsapis 1 22m

kubeapps-internal-kubeops 1 22m

kubeapps-operator 1 27s

[root@node22 kubeapps]# kubectl -n kubeapps get secrets

NAME TYPE DATA AGE

default-token-8ln77 kubernetes.io/service-account-token 3 23m

kubeapps-internal-apprepository-controller-token-5mfd8 kubernetes.io/service-account-token 3 22m

kubeapps-internal-kubeappsapis-token-stbpw kubernetes.io/service-account-token 3 22m

kubeapps-internal-kubeops-token-hrn6b kubernetes.io/service-account-token 3 22m

kubeapps-operator-token-qx5jz kubernetes.io/service-account-token 3 35s

kubeapps-postgresql Opaque 1 22m

sh.helm.release.v1.kubeapps.v1 helm.sh/release.v1 1 22m


Original article by 192.168.1.1. If you repost it, please cite the source: https://www.224m.com/232167.html